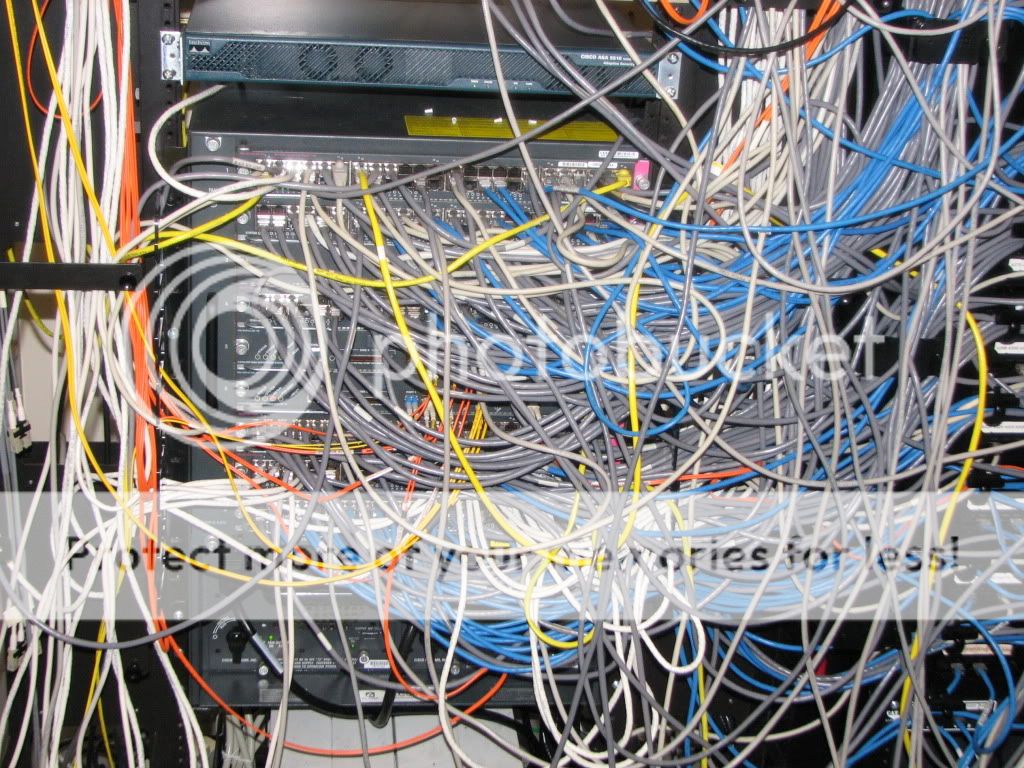

This was the state of affairs that I was tasked with "managing". Somewhere in the lower left corner, buried under a mess of cabling, is a 9-slot Cisco 6500 series switch with a number of 48-port 10/100 and gigabit cards, a couple of fiber cards and, I think, one or two blanks. On the right were all the patch panels for everything ever made. I think there were about 250 ports, about 150 of which were active.

The time came to replace the switch under lease, and I knew I wasn't going to just replace it with the same thing. We decided to go from a 9-slot chassis to a 4-slot one to handle the fiber and a few gig cards, and then use separate workgroup switches, linked by gig copper back to the core, for access to the majority of the clients. Bandwidth wasn't really a huge concern - the workgroup switches were essentially daisy-chained together, so all 5 of them shared 2 Gig ports. There wasn't any huge file sharing/moving going on - mostly network file access and email - and those few that needed better speeds got hooked directly to the core Gig ports. The impetus for switching to the workgroup switches was wiring manageability: being able to keep the runs from patch panel to switch much shorter and easier to handle. First, though, we removed all the unused wiring we could - there were lots of inactive ports that you just couldn't spot because of the spaghetti monster that had taken over:

Here's a close-up of the strategy that we were employing - a 48-port switch sandwiched in between a few sets of patch panels so we could use 6-inch, 1-foot and 2-foot patch cords.

And here is the final result. Performance was the same or better, management was actually possible, and wiring was easy to trace, remove, and change as needed.
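For anyone wondering how five workgroup switches can get away with sharing 2 Gig ports, here is a rough back-of-the-envelope sketch of the oversubscription ratio. The port speed of 100 Mbit/s per client and the assumption that every workgroup switch is a 48-port model are my guesses for illustration, not measured figures, and `oversubscription_ratio` is just a hypothetical helper name.

```python
def oversubscription_ratio(client_ports: int, port_mbps: float, uplink_mbps: float) -> float:
    """Worst-case demand from client ports divided by uplink capacity."""
    if uplink_mbps <= 0:
        raise ValueError("uplink capacity must be positive")
    return (client_ports * port_mbps) / uplink_mbps


# Assumed figures (not from measurements):
SWITCHES = 5            # workgroup switches sharing the uplinks
PORTS_PER_SWITCH = 48   # assumed 48-port models throughout
PORT_MBPS = 100         # assumed 10/100 client connections
UPLINK_MBPS = 2 * 1000  # two shared gigabit uplinks to the core

# Every port populated and saturated at once (a worst case that rarely happens).
all_ports = oversubscription_ratio(SWITCHES * PORTS_PER_SWITCH, PORT_MBPS, UPLINK_MBPS)

# Only the roughly 150 active ports, ignoring the few moved directly to the core.
active_ports = oversubscription_ratio(150, PORT_MBPS, UPLINK_MBPS)

print(f"all ports: {all_ports}:1, active ports: {active_ports}:1")
```

Under those assumptions the ratio comes out somewhere around 7.5:1 to 12:1, and that only if every client saturates its link at the same moment. With mostly file access and email, real demand sits far below that, which matches what we saw.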

Has anyone else had to deal with similar nightmare wiring? How did you handle it?