Chrispy_ wrote:Thanks for confirming that Lightboost fixes only sample and hold blur, Auxy.

Since this is a thread about V-sync technologies, we don't need yet another Auxy-vs-The-World Lightboost crusade; there are already dozens of those threads scattered all over the forums.

Yeah, Lightboost is good, but it's also irrelevant and off-topic here.

auxy wrote:You're a jerk. I mentioned it because if G-Sync and Lightboost are incompatible, then I think G-Sync is a non-starter for hardcore gamers, who are the only ones likely to pick up the niche hardware required at this time. So I'm saying G-Sync will have to become more widely available to have any chance of success.

Chrispy_ wrote:auxy wrote:You're a jerk.

Why do you always do this? There's no need to be so aggressive in a discussion, and there's no need to force everyone to think the exact way you think.

I'm just trying to keep this thread on topic, which is about vsync technology. Maybe I called this one too early, but there are enough threads discussing lag/latency/120Hz/TN-vs-IPS that turn into an argument between you and everyone else in the thread.

DeadOfKnight wrote:I'd appreciate it if you both kept these personal jabs to yourselves. Nobody likes having their thread locked because other people can't behave themselves.

Chrispy_ wrote:Just to clarify, both are cumulative.

Even a "slow" 6ms panel exhibits less visible ghosting than the 1-frame blur of sample-and-hold.

Chrispy_ wrote:Yes, one way of saying that. It needs further expansion, however:

Yeah, agreed that "blur" as seen by most people is a combination of sample & hold persistence AND pixel response delay, but in the interests of simplification, especially with "1ms" gaming screens, pixel response is insignificant compared to the 16.7ms (60Hz) or 8.3ms (120Hz) of blur caused by sample & hold. It's actually more accurate to always describe the blur or ghosting on non-strobing screens as "one unwanted frame", no matter what refresh rate and pixel response your screen has. Whilst strobing effectively fixes blur or ghosting, it has nothing to do with fluidity of motion, only with the perceived sharpness of moving objects your eye follows across the screen.
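A rough way to see these numbers: on a non-strobed (sample-and-hold) screen each refresh stays lit for the whole frame time, 1000/Hz milliseconds, so about 16.7ms at 60Hz and 8.3ms at 120Hz, against the "1ms" quoted for pixel response. The Python sketch below assumes the common approximation that blur width while your eye tracks an object is its on-screen speed times how long each frame stays visible, and adds pixel response on top for the non-strobed case as a crude reading of the point that the two effects are cumulative. The function names, the 960 px/s speed and the 2ms strobe pulse are hypothetical values chosen for illustration.

```python
from typing import Optional

# Rough sample-and-hold motion-blur arithmetic (illustrative sketch only).
# Assumption: blur seen while eye-tracking ~= on-screen speed x time each frame is visible.


def frame_time_ms(refresh_hz: float) -> float:
    """How long one refresh stays lit on a non-strobed (sample-and-hold) display."""
    return 1000.0 / refresh_hz


def persistence_ms(refresh_hz: float, strobe_pulse_ms: Optional[float] = None) -> float:
    """Visible time per frame: the whole frame time, or the strobe pulse if strobing."""
    frame = frame_time_ms(refresh_hz)
    if strobe_pulse_ms is None:
        return frame
    # A flash cannot last longer than the refresh it belongs to.
    return min(strobe_pulse_ms, frame)


def tracking_blur_px(speed_px_per_s: float, visible_ms: float) -> float:
    """Approximate blur width (pixels) for an object the eye follows across the screen."""
    return speed_px_per_s * visible_ms / 1000.0


if __name__ == "__main__":
    speed = 960.0          # hypothetical panning speed, pixels per second
    pixel_response = 1.0   # a "1ms" gaming panel, as mentioned in the thread
    for hz in (60, 120):
        hold = persistence_ms(hz)
        # Crude additive model for a non-strobed screen: hold time + pixel response.
        blur = tracking_blur_px(speed, hold + pixel_response)
        print(f"{hz}Hz: hold {hold:.1f} ms vs response {pixel_response:.1f} ms, "
              f"blur ~{blur:.1f} px")
    # Hypothetical 2 ms flash at 120Hz. Assumption: pixel transitions finish
    # while the backlight is dark, so only the flash length counts here.
    strobed = persistence_ms(120, strobe_pulse_ms=2.0)
    print(f"120Hz strobed: visible {strobed:.1f} ms, "
          f"blur ~{tracking_blur_px(speed, strobed):.1f} px")
```

At 960 px/s this works out to roughly 17 pixels of trail at 60Hz and 9 at 120Hz, of which the 1ms response contributes under one pixel, while the hypothetical strobed case stays under 2 pixels.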

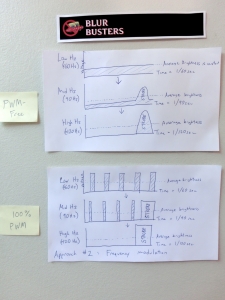

mdrejhon wrote:Yep, that's what my diagram shows.

A good option for G-Sync with strobing would be to have a constant, traditional backlight for refresh rates below 85Hz, and to strobe only at 85Hz or higher. It would be nice if strobing could be effective at lower frequencies, but I don't think flicker is ever desirable.
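The proposal above amounts to a simple switching rule. With variable refresh, the effective rate at any moment is set by how long the last frame took, so this Python sketch converts each frame interval to Hz and strobes only at 85Hz or above. The names are hypothetical, and this is not how any shipping G-Sync or Lightboost monitor is known to behave.

```python
# Sketch of the proposed G-Sync + strobing policy: steady backlight below a
# threshold refresh rate, strobed backlight at or above it.
# Hypothetical names; not based on any real monitor firmware.

THRESHOLD_HZ = 85.0  # threshold suggested in the post


def instantaneous_hz(frame_interval_ms: float) -> float:
    """With variable refresh, the effective rate follows the time since the last frame."""
    return 1000.0 / frame_interval_ms


def backlight_mode(frame_interval_ms: float, threshold_hz: float = THRESHOLD_HZ) -> str:
    """Return 'strobe' when the current rate is high enough to avoid visible flicker."""
    if instantaneous_hz(frame_interval_ms) >= threshold_hz:
        return "strobe"
    return "constant"


if __name__ == "__main__":
    # Hypothetical frame intervals from a game whose frame rate varies.
    for interval in (8.3, 11.0, 12.5, 16.7, 25.0):
        print(f"{interval:5.1f} ms -> {instantaneous_hz(interval):5.1f} Hz -> "
              f"{backlight_mode(interval)}")
```

A real display would probably also need some hysteresis around the threshold so that a game hovering near 85fps does not toggle between modes every frame; that refinement is left out here.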

In other news, Eizo just announced another strobe-backlight monitor, the FG2421:

Techreport thread on Eizo Foris FG2421

Either way, it is great that more and more vendors are recognizing the motion blur benefits of strobing, at least as useful optional modes.

JohnC wrote:Airmantharp wrote:

That's not a review - it's a garbage advertising post. Just like everything else this "expert" site contains.

sschaem wrote:I obviously know this, if you read a later post --

I'm sorry to say this, but both assumptions are wrong. Even so, you are correct about games overdoing it.

The human eye/brain cannot distinguish discrete changes at high temporal resolution. Human persistence of vision is said to be ~1/25 of a second (40ms).

It's easy to verify: hold up your hand, focus on it, and shake it as if you were waving goodbye. Focus on your fingertips: motion blur on focused objects.

auxy wrote:Read the whole thread. :)

More to the point I was making, though, objects under your crosshair -- objects your character is clearly focusing on right now -- also get blurred. This is unrealistic. Things in motion only get blurry in your vision when your eyes can no longer track them. As long as your eye can track it -- and your eye, or at least my eyes, can track pretty damn fast -- and you stay focused on it, it should stay clear.

objects under your crosshair -- objects your character is clearly immediately focusing on -- also get blurred. This is unrealistic.

auxy seems correct in this one situation -- only IF you're watching the moving object, waiting for it to go under the crosshairs.

Chrispy_ wrote:How are they not the same? You look at places on-screen besides the center of it often, do you?

Those two things are not the same, and in the context of motion blur the focal point of the character is *THE* deciding factor in what should be blurred and what should not.
